What is the Kids Online Safety Act (KOSA)?

It’s no secret that children and teenagers are more glued to their screens than ever before. Children ages 8 to 12 spend an average of over 5 hours per day on their screens, while teenagers log over 8 hours every day. When your child is online, they are the product, and Big Tech is trying every method possible to keep them scrolling, clicking ads, and sharing every detail of their lives.

In today’s age of social media, the statistics on mental health issues among youth are staggering. Almost half of U.S. teens have experienced bullying or harassment online. Between 2010 and 2019, teen depression rates doubled, with teenage girls seeing the sharpest increase. In 2021, almost a third of girls said they seriously considered attempting suicide.

Since Big Tech refuses to protect our children, it’s time for Congress to step in.

The Kids Online Safety Act Will Keep Our Children Safer

The bipartisan Kids Online Safety Act (KOSA), which is supported by over half of the U.S. Senate, provides young people and parents with the tools, safeguards, and transparency they need to protect against online harms. The bill requires social media platforms to put the well-being of children first by providing an environment that is safe by default.

Specifically, the Kids Online Safety Act:

Requires social media platforms to provide minors with options to protect their information, disable addictive product features, and opt out of personalized algorithmic recommendations.

Requires platforms to enable the strongest privacy settings for kids by default.

Gives parents new controls to help protect their children and spot harmful behaviors, and provides parents and educators with a dedicated channel to report harmful behavior.

Creates a duty for online platforms to prevent and mitigate specific dangers to minors, including promotion of suicide, eating disorders, substance abuse, sexual exploitation, and advertisements for certain illegal products (e.g., tobacco and alcohol).

Ensures that parents and policymakers know whether online platforms are taking meaningful steps to address risks to kids by requiring independent audits and research into how these platforms impact the well-being of kids and teens.

The Kids Online Safety Act Has Broad Bipartisan Support

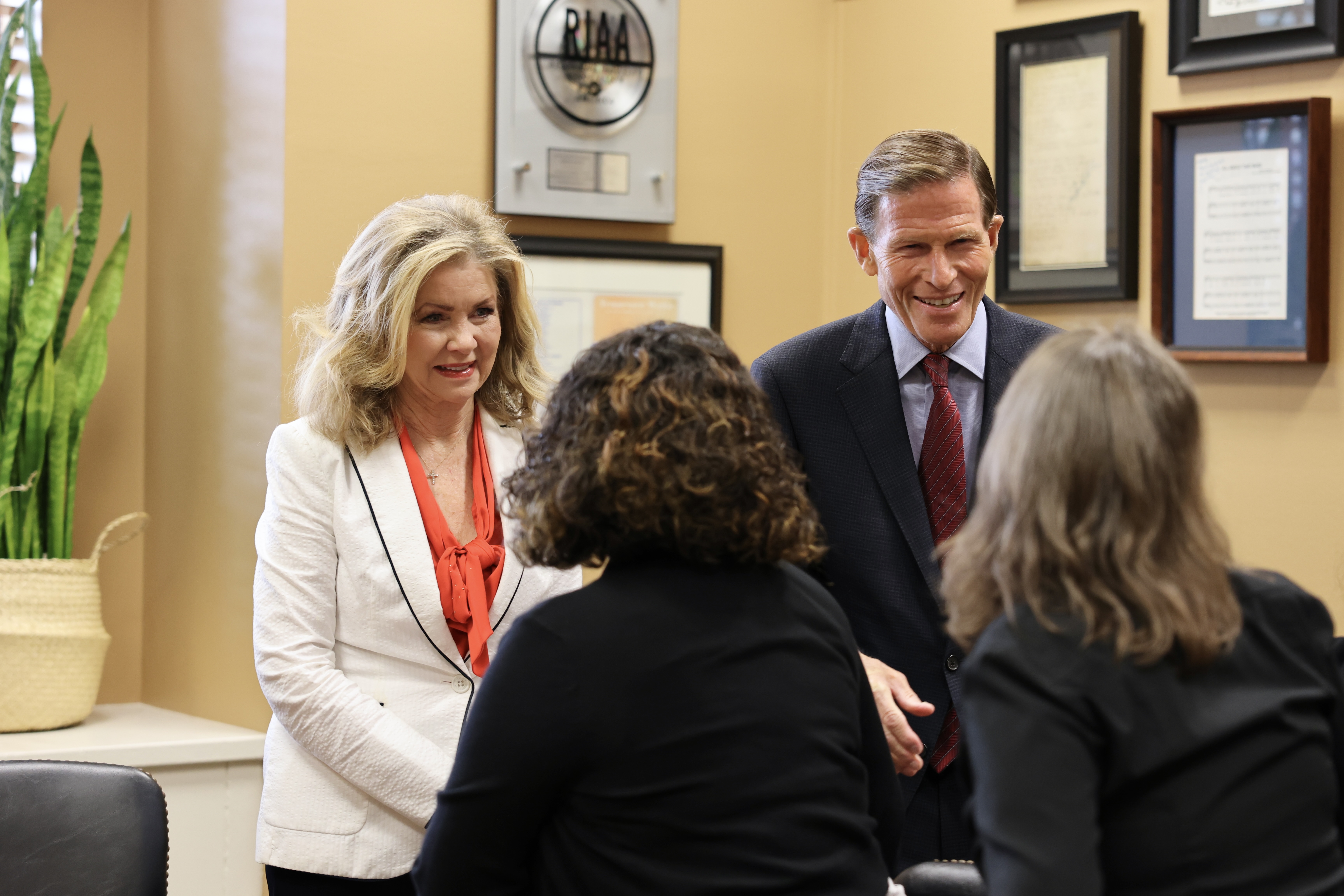

Senators Blackburn and Blumenthal first introduced the Kids Online Safety Act in February 2022, following reporting by the Wall Street Journal and after spearheading a series of five subcommittee hearings with social media companies and advocates on the repeated failures of tech giants to protect kids on their platforms. Senators Blackburn and Blumenthal have stood their ground in the face of aggressive lobbying and flagrant lies from Big Tech companies, uniting 62 members of the U.S. Senate and gathering the endorsements of over 240 organizations, representing parents, child safety advocates, tech experts, faith leaders, pediatricians, and child psychologists, in support of the legislation.

Fact vs. Fiction

No, the Kids Online Safety Act does not give state Attorneys General or the FTC the power to bring lawsuits over content or speech. The Kids Online Safety Act would not censor, block, or remove any content from the internet.

The Kids Online Safety Act targets the harms that online platforms cause through their own product and business decisions – like how they design their products and applications to keep kids online for as long as possible, train their algorithms to exploit vulnerabilities, and target children with advertising.

Additionally, the Kids Online Safety Act does not amend Section 230 of the Communications Decency Act, which provides immunity to online platforms for third-party content. As a result, any lawsuits brought by the FTC over the content that online platforms host are likely to be quickly tossed out of court.

Finally, the bill includes a specific, express provision ensuring that a company cannot be liable for providing content to young users when the user has searched for that content.

The “duty of care” requires social media companies to prevent and mitigate certain harms that they know their platforms and products are causing to young users as a result of their own design choices, such as their recommendation algorithms and addictive product features. The specific covered harms include suicide, eating disorders, substance use disorders, and sexual exploitation.

For example, if an app knows that its constant reminders and nudges are causing its young users to use the platform obsessively, to the detriment of their mental health, or are being used to exploit those users financially, the duty of care would allow the FTC to bring an enforcement action. This would force app developers to consider ahead of time whether these nudges are harming kids, and potentially to avoid using them.

Companies in every other industry in America are required to take meaningful steps to prevent users of their products from being hurt, and this simply extends that same kind of responsibility to social media companies, too.

Importantly, not all platforms have the same functionality or business model, so the duty of care forces online platforms to consider and address the negative impacts of their specific product or service on younger users, without imposing rules that may not work for each website or service.

The duty of care only applies to a fixed and clearly established set of harms, and sets a high standard for what online platforms can be held accountable for.

This specific list of harms includes medically recognized mental health disorders (suicidal behaviors, eating disorders, and substance use disorders), addictive use, illicit drugs, and federally defined child sexual exploitation crimes.

The FTC – which has the power to enforce the duty of care – cannot add or change the harms covered under the bill.

No, the Kids Online Safety Act does not make online platforms liable for the content they host or choose to remove.

Additionally, the bill includes a specific, express provision ensuring that a company cannot be liable for providing content to young users when the user has searched for that content.

No, the Kids Online Safety Act actually includes an express provision ensuring that a company cannot be liable for providing content to young users when the user has searched for that content. And, in order to ensure that mental health support services are protected and encouraged, the bill provides explicit protections for those services (e.g., the National Suicide Hotline, substance abuse organizations, or LGBTQ youth centers).

That means that a social media platform doesn’t face any legal liability when users log onto the platform and seek out helpful resources, like the National Suicide Hotline or information about how to treat substance abuse.

No, the Kids Online Safety Act does not impose age verification requirements or require platforms to collect more data about users (government IDs or otherwise).

In fact, the bill states explicitly that it does not require age gating, age verification, or the collection of additional data from users.

Under the Kids Online Safety Act, if an online platform already knows that a user is underage, then it has to provide the safety and privacy protections required by the legislation—the platform cannot bury its head in the sand when it knows a user is underage.

Online platforms often already request a date of birth from new users, either for advertising and profiling the user, or for compliance with Children's Online Privacy Protection Act (COPPA). Online platforms also frequently collect or purchase substantial amounts of other data to understand more about their users.

But if an online platform truly doesn’t know the age of the user, then it does not face any obligation to provide protections or safeguards under the bill or to collect more data in order to determine the user’s age.

No, teenage users don’t need permission to create new accounts or go online. The bill does require permissions and safeguards for young children, those under the age of 13, similar to existing law under COPPA.

No, the Kids Online Safety Act doesn’t require the disclosure of private information, such as browsing history, messages, or friends lists, to parents or other users.

What the Kids Online Safety Act does do is create an obligation for platforms and apps to provide safeguards and tools to parents and kids. These guardrails are focused on protecting kids’ privacy, preventing addictive use, and disconnecting users from recommendation systems. Parental tools – such as the ability to restrict purchases and financial transactions, as well as to view and control privacy and account settings or metrics of total time spent on the platform – are turned on by default for children (under age 13). They are provided only as an option for teens (aged 13 to 16) to turn on as families choose.

The bill also includes additional protections and guardrails on the parents’ tools to protect the privacy of minors, such as requiring platforms to notify teens and children when parental controls are enabled.

No, the Kids Online Safety Act only covers social media, social networks, multiplayer online video games, social messaging applications, and video streaming services. It does not include blogs or personal websites.

No, websites run by non-profit organizations – which often host important and valuable educational and support services – are not covered by the scope of the legislation.
